Preface

While working on the dockOvpn project, I wondered whether I could use nested Docker containers to build an all-in-one mega service capable of acting as both an OpenVPN server and a client. At the time I decided to go for a single-feature container, because I didn’t want to overload it with functionality and, at the same time, I aimed to keep it as simple as possible for the end user. I also used my own build pipeline to deliver Docker images, and I learned that my $5 DigitalOcean machine got stuck from time to time because generated Docker images ate up all of its available disk space. The irony was that all of these artefacts were transient and needed only to build the main artefact. I’d have been happy to get rid of them as soon as the main artefact was built. I tried complicated shell scripts, but that approach was error-prone and my server kept getting stuck anyway. That was the first time I thought how cool it would be if I could generate intermediary artefacts, keep them entirely within the builder container and have all that garbage removed once the container stopped.

DinD, the convoluted way

Before I began my experiments, I had a chance to read this brilliant article, which strongly discouraged using Docker the way I was going to. Needless to say, it didn’t stop me and I decided to go on. I started from scratch, i.e. from the official Docker-in-Docker image. As I understand it, that image is meant to be used the standard way, namely with the Docker daemon socket bind-mounted from the host: -v /var/run/docker.sock:/var/run/docker.sock. There is also a dind variant (tags with the -dind suffix), though its documentation is somewhat lacking. For the sake of self-education, and to get a lighter, simpler setup, I started playing around with the regular Docker image. These are the steps I used to get a working solution:


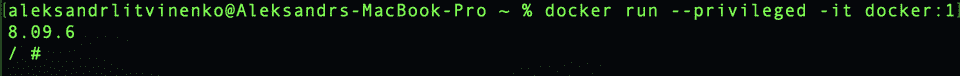

Let’s run DinD the old-fashioned way:

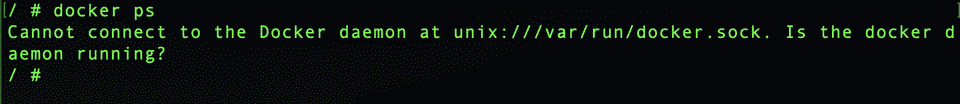

docker run --privileged -it docker:18.09.6

You’ll be surprised, but the Docker daemon isn’t running, and calling

docker ps

results in:

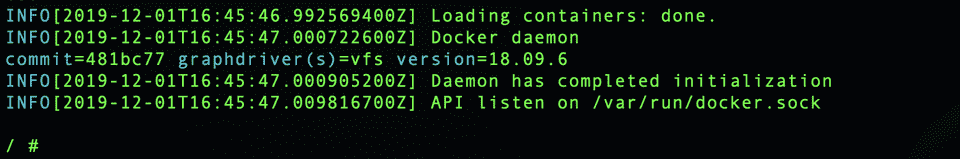

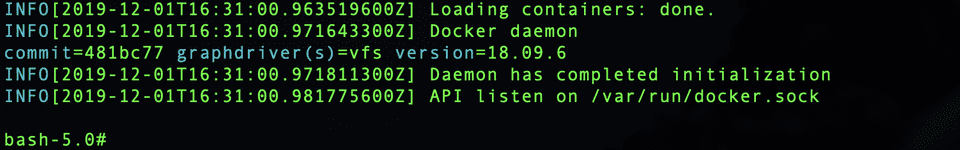

Not a big deal, let’s launch it ourselves:

dockerd

Now we can try running dockerd again. But this time, let’s run it in the background so we can keep entering commands in the same terminal:

dockerd &
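Since dockerd & returns immediately, a docker ps typed right after it can still fail while the daemon is starting up. Here is a minimal sketch, in plain sh because the docker:18.09.6 image is Alpine-based, that sends the daemon’s output to a log file instead of the terminal and polls docker info until the daemon answers or a timeout expires. The function names and the /var/log/dockerd.log path are my own hypothetical choices, not something the image provides.

```shell
#!/bin/sh
# Sketch: start dockerd in the background and wait until it answers.
# Assumptions: run as root inside the docker:18.09.6 container; the function
# names and the default log path are hypothetical.

DOCKERD_LOG="${DOCKERD_LOG:-/var/log/dockerd.log}"

# Poll "docker info" once per second; give up after $1 seconds (default 30).
wait_for_docker() {
    limit="${1:-30}"
    waited=0
    until docker info >/dev/null 2>&1; do
        if [ "$waited" -ge "$limit" ]; then
            echo "dockerd is not ready after ${limit}s, see $DOCKERD_LOG" >&2
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
}

# Launch the daemon in the background, keeping its log out of the terminal.
start_dockerd() {
    dockerd >"$DOCKERD_LOG" 2>&1 &
    wait_for_docker "$1"
}
```

After loading these functions into the container’s shell (for example with the . command on the file you saved them in), start_dockerd 60 && docker ps should print an empty container list instead of the connection error.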

You can see this and other experiments on my YouTube channel.

DinD, the easy way

The approach described above has one major flaw: it’s hard to base real-world services on it, since if anything crashes we have to start everything all over again, and we can’t run our DinD recursively.

Let’s containerise our solution. To keep a long story short, I’ve already done it, and you can go and inspect this project. Its Dockerfile is almost identical to what we did above.
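I’m not reproducing that Dockerfile here, but the core of any such image is an entrypoint that does what we did by hand: start dockerd in the background, wait for it, then hand over to the container’s command. The script below is a hypothetical dind-entrypoint.sh, written as a sketch rather than taken from the project; the DIND_WAIT_SECONDS variable and the log path are assumptions. It only runs dind_main when invoked under that file name, which keeps it easy to test by sourcing.

```shell
#!/bin/sh
# dind-entrypoint.sh: hypothetical entrypoint for a Docker-in-Docker image.
# Starts dockerd in the background, waits for it, then execs the container
# command (an interactive sh if none is given).

DOCKERD_LOG="${DOCKERD_LOG:-/var/log/dockerd.log}"

dind_main() {
    dockerd >"$DOCKERD_LOG" 2>&1 &

    limit="${DIND_WAIT_SECONDS:-30}"
    waited=0
    until docker info >/dev/null 2>&1; do
        if [ "$waited" -ge "$limit" ]; then
            echo "dockerd failed to start, see $DOCKERD_LOG" >&2
            exit 1
        fi
        sleep 1
        waited=$((waited + 1))
    done

    # Replace this shell with the requested command so it receives signals.
    if [ "$#" -eq 0 ]; then
        set -- sh
    fi
    exec "$@"
}

# Run only when executed as dind-entrypoint.sh, not when sourced.
case "$0" in
    *dind-entrypoint.sh) dind_main "$@" ;;
esac
```

In a Dockerfile this would be wired up with something like COPY dind-entrypoint.sh /usr/local/bin/ (the file must be executable) and ENTRYPOINT ["dind-entrypoint.sh"]; the container still has to be started with --privileged.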

Experiment 1. Running two child containers within DinD

This one is quite simple. We’re going to launch MySQL and a very simple Node.js app within our DinD container. It takes just a few steps:

We start DinD:

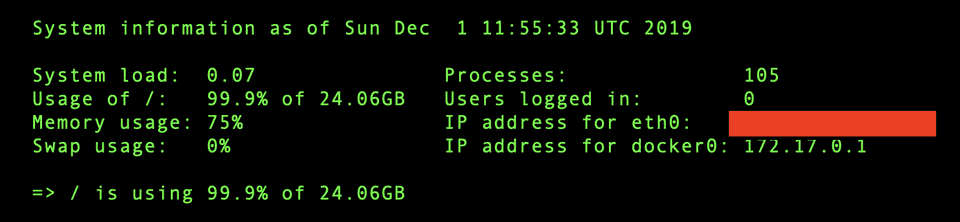

docker run --privileged -it -p 80:8080 -p 3306:3306 alekslitvinenk/dind

You’ll see:

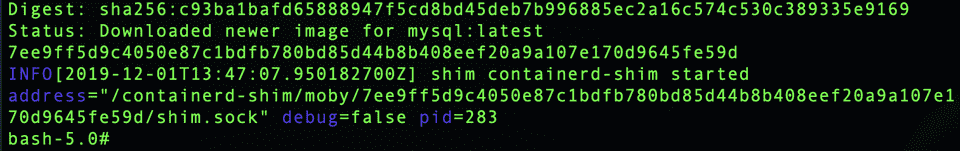

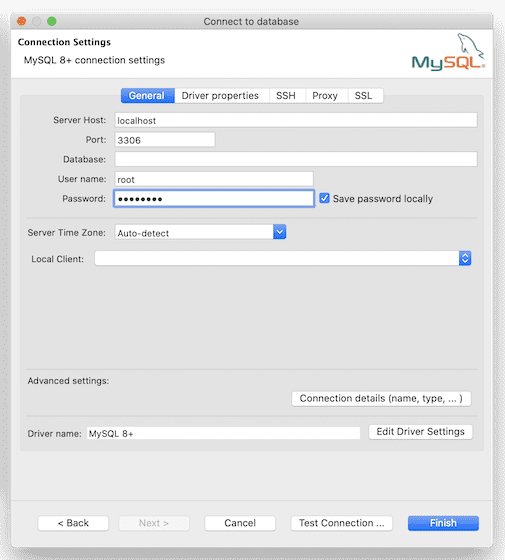

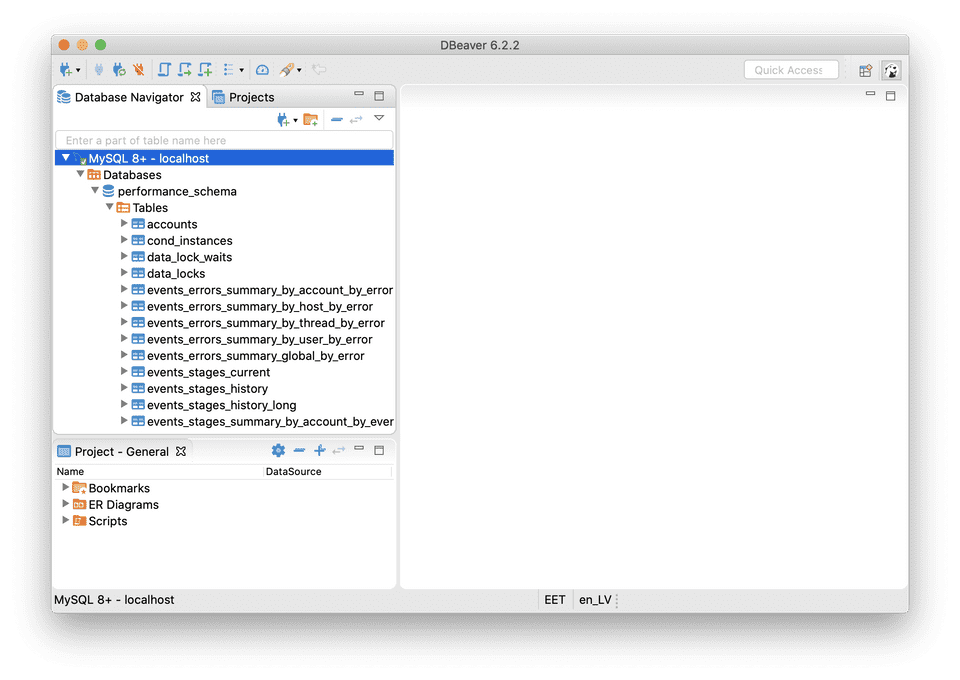

With this container up and running, let’s start a MySQL container. Copy and paste the following command into the shell of the DinD container:

docker run --name mysql -e MYSQL_ROOT_PASSWORD=password -d -p 3306:3306 mysql

Note: please never use “password” as your real password. It’s okay only for this tutorial.

If we did everything right, you should see the following output in your console:

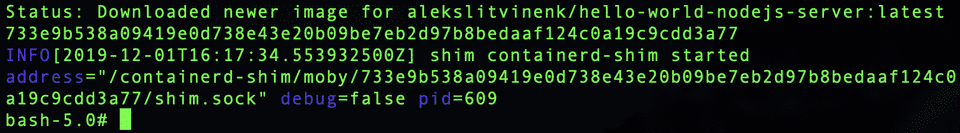

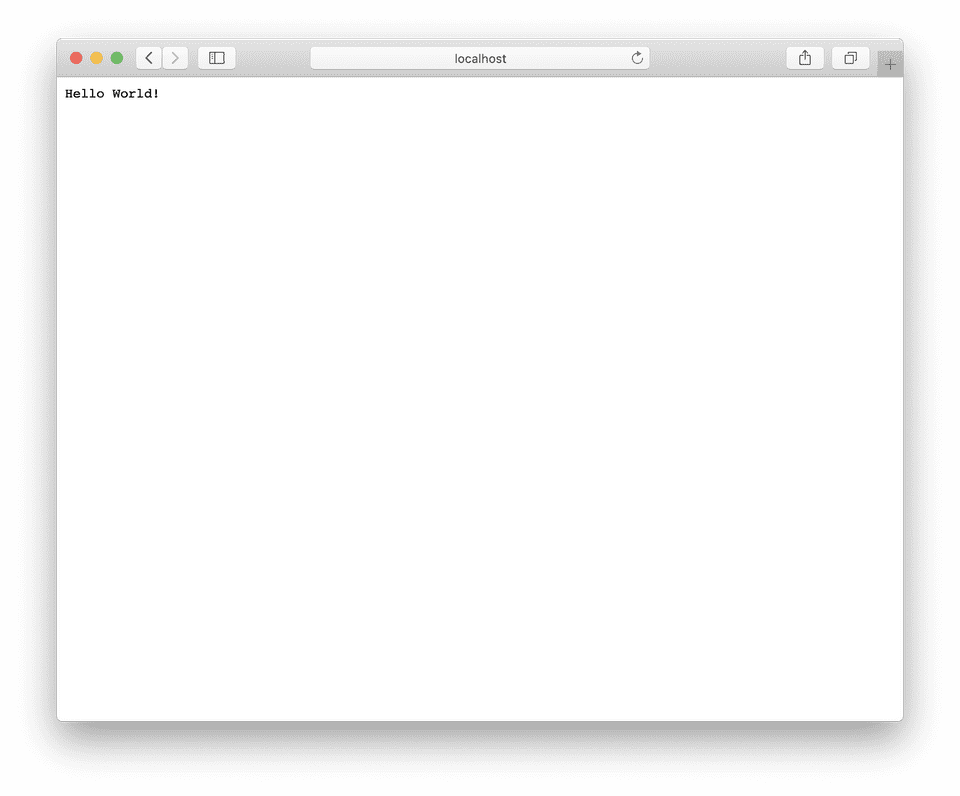

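Next, we launch a simple Node.js app as a sibling of our MySQL container. Solely for this article, I created an app that returns the string “Hello World!” whenever it’s accessed via HTTP. Let’s run it:

docker run -d --rm -p 8080:8080 alekslitvinenk/hello-world-nodejs-server

If we now go to localhost in the browser on the host, we’ll see the “Hello World!” response. The request travels from port 80 on the host to port 8080 of the DinD container, which the inner daemon in turn forwards to port 8080 of the app container.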
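If you’d rather drive Experiment 1 from the host than type into the DinD shell, something like the sketch below should do it via docker exec. It rests on an assumption I haven’t verified: that alekslitvinenk/dind starts its own dockerd and keeps running when started detached with a TTY (-dit). The container name dind, the function name and the 60-second wait are hypothetical. The ports are the same as before: host port 80 reaches the app through DinD’s port 8080, and host port 3306 reaches MySQL through DinD’s port 3306.

```shell
#!/bin/sh
# Sketch: run Experiment 1 from the host without an interactive DinD shell.
# Assumptions (unverified): alekslitvinenk/dind starts its own dockerd and
# stays up when run with -dit; "dind" is a hypothetical container name.

run_experiment_1() {
    # Parent container: host 80 -> DinD 8080, host 3306 -> DinD 3306.
    docker run --privileged -dit --name dind \
        -p 80:8080 -p 3306:3306 \
        alekslitvinenk/dind || return 1

    # Wait (up to 60s) for the daemon inside the DinD container.
    waited=0
    until docker exec dind docker info >/dev/null 2>&1; do
        [ "$waited" -ge 60 ] && return 1
        sleep 1
        waited=$((waited + 1))
    done

    # Children are created by the inner daemon and published on DinD's ports.
    docker exec dind docker run --name mysql \
        -e MYSQL_ROOT_PASSWORD=password -d -p 3306:3306 mysql || return 1
    docker exec dind docker run -d --rm -p 8080:8080 \
        alekslitvinenk/hello-world-nodejs-server || return 1
}
```

Once run_experiment_1 succeeds and the app has had a moment to start, curl http://localhost/ on the host should return the “Hello World!” string.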

Conclusion: we just ran two Docker apps inside another Docker container, and we can dispose of these images, together with their containers, simply by stopping (and removing) the parent container! You can see this experiment on YouTube as well.
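Tearing everything down is a single command on the host. One caveat, though: if a DinD image declares a VOLUME for /var/lib/docker (the official docker:dind variant does; I haven’t checked alekslitvinenk/dind), the inner images end up in an anonymous volume, and a plain docker rm leaves that volume behind on the host. Passing --volumes to docker rm removes anonymous volumes together with the container. The function name and the default container name dind are hypothetical.

```shell
#!/bin/sh
# Sketch: remove a DinD container together with everything created inside it.
# Assumption: the parent container is called "dind" unless a name is passed.

teardown_dind() {
    name="${1:-dind}"
    # --force stops the container first if it is still running.
    # --volumes also deletes its anonymous volumes, e.g. one backing
    # /var/lib/docker, so the inner images don't linger on the host.
    docker rm --force --volumes "$name"
}
```

Afterwards, docker volume ls --filter dangling=true on the host lists any volumes that are no longer referenced by a container, which is a quick way to check that nothing was left behind.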

Experiment 2. Recursive Docker in Docker

This application of DinD is a bit less practical, but nonetheless very interesting from a scientific point of view. How deep can we get running DinD recursively? Let’s do the following experiment:

In your terminal, enter:

DIND_DEPTH=0

Let 0 be the depth of the host level.

Run DinD with the DIND_DEPTH environment variable:

docker run --privileged -it -e DIND_DEPTH=$((DIND_DEPTH+1)) alekslitvinenk/dind

Here we take the current DIND_DEPTH value, increment it by 1 and pass the result on to the inner container.
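The $((DIND_DEPTH+1)) part is expanded by the shell you type the command into, before docker even starts, so each level hands its own depth plus one down to the next. The same step wrapped in a function might look like this; dind_deeper and the DIND_MAX_DEPTH guard are hypothetical additions, and an unset DIND_DEPTH is treated as 0, the host level.

```shell
#!/bin/sh
# Sketch: start the next nested DinD level with DIND_DEPTH incremented by one.
# DIND_MAX_DEPTH is a hypothetical safety limit (default 10).

dind_deeper() {
    # Expanded here, at the current level, before docker runs.
    next=$(( ${DIND_DEPTH:-0} + 1 ))
    if [ "$next" -gt "${DIND_MAX_DEPTH:-10}" ]; then
        echo "refusing to go deeper than ${DIND_MAX_DEPTH:-10}" >&2
        return 1
    fi
    docker run --privileged -it -e "DIND_DEPTH=$next" alekslitvinenk/dind
}
```

Keep in mind that the function only exists in the shell where you defined it: every new level is a fresh container, so you would have to define it again there (or bake it into the image).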

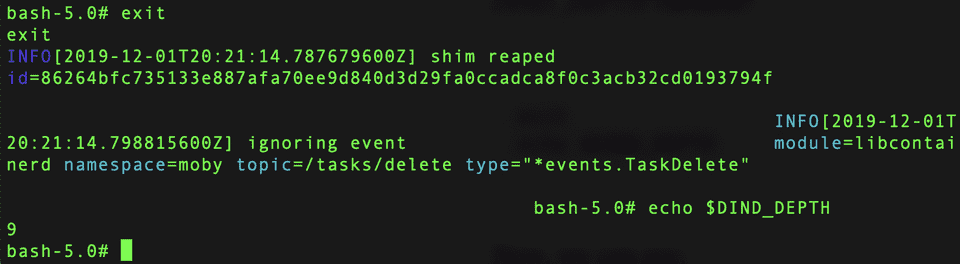

Let’s repeat the previous step 9 times.

Notice that from now on, every time you paste the above command and hit Enter, the DinD image gets pulled again. This happens because every time we launch DinD we create a brand-new, empty image and container store, which contains none of the images and containers from the upper (outer) level.
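To see that every level really starts with its own empty store, you can ask the daemon at the current level where its data lives and how many images it holds. A sketch, assuming dockerd is already running at that level; the function name is hypothetical. docker info --format '{{.DockerRootDir}}' prints the daemon’s data directory, and docker images --quiet lists image IDs one per line.

```shell
#!/bin/sh
# Sketch: describe the image store of the daemon at the current DinD level.
# Assumption: dockerd is already running in this container; the function
# name is hypothetical.

describe_image_store() {
    # Data directory of the daemon we are talking to.
    root=$(docker info --format '{{.DockerRootDir}}') || return 1
    # Number of image IDs in that daemon's store (one per line).
    count=$(docker images --quiet | wc -l | tr -d ' ')
    echo "depth=${DIND_DEPTH:-0} root=$root images=$count"
}
```

The path should read /var/lib/docker at every level, but each time it is a different directory in that level’s own container filesystem, so right after entering a new level the image count should be 0.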

Let’s check our container’s local DIND_DEPTH variable:

echo $DIND_DEPTH

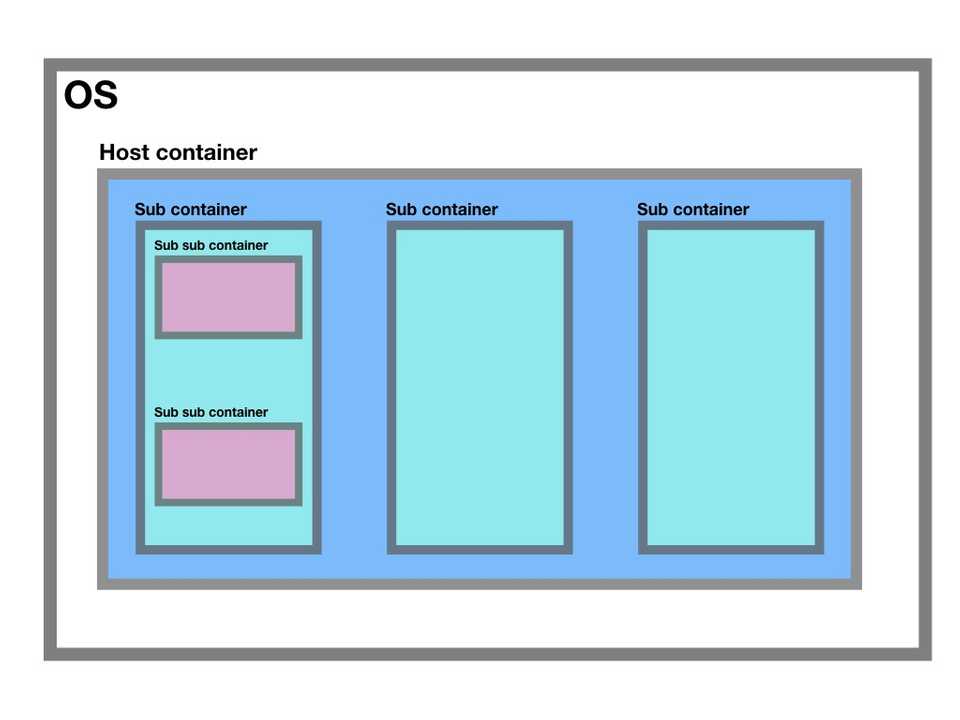

So we can assume our container structure is as follows:

DIND_DEPTH=0 (HOST)

|- DIND_DEPTH=1 (CONTAINER)

| |- DIND_DEPTH=2 (CONTAINER)

| | |- DIND_DEPTH=3 (CONTAINER)

| | | |- DIND_DEPTH=4 (CONTAINER)

| | | | |- DIND_DEPTH=5 (CONTAINER)

| | | | | |- DIND_DEPTH=6 (CONTAINER)

| | | | | | |- DIND_DEPTH=7 (CONTAINER)

| | | | | | | |- DIND_DEPTH=8 (CONTAINER)

| | | | | | | | |- DIND_DEPTH=9 (CONTAINER)

| | | | | | | | | |- DIND_DEPTH=10 (CONTAINER)

Let’s check that this is true. We will exit the containers one by one and check the DIND_DEPTH variable each time:

exit

echo $DIND_DEPTH

Repeating these two commands, we get:

8, 7, 6, 5, 4, 3, 2, 1

It’s remarkable!

In theory, we could construct a very complex tree-like containerised application, but that goes far beyond the scope of this article. If you’re interested in this experiment, stay tuned: I’m going to publish a new video on my YouTube channel!

Summary

Docker in Docker is definitely a way to go should you need to boost your staging environment or create a self-cleaning CI/CD pipeline. In many cases DinD may prove much more convenient than Kubernetes and Jenkins X. But it comes with a few gotchas, for example: bind-mounting directories into your inner containers might not work as you expect, or might not work at all (if you’re interested, subscribe; I’ll cover this topic later); and it’s still purely a research project, i.e. everything you do with DinD comes with absolutely no warranty. I’d say that if you’re a very small company or an individual, it is a totally viable solution. But as you grow, you might want to consider more reliable, industry-standard solutions.